How does adaptive bitrate streaming impact live stream latency?

A common assumption in video tech is that adaptive bitrate streaming (ABR) is mainly for on-demand playback, where users watch pre-recorded content and the player has time to buffer and adapt. But that’s only half the picture.

ABR is just as critical in live streaming, where viewer networks vary wildly and the margin for buffering is razor-thin. Without it, you’re forced to pick a single bitrate for everyone: either too high (and risk stalling) or too low (and sacrifice quality). Neither works at scale.

In this post, we’ll explore how ABR works in live scenarios, why it often introduces latency, and how to configure your pipeline to deliver smooth, near-real-time playback. You’ll also see how FastPix handles this under the hood, making low-latency adaptive live streaming possible with minimal setup.

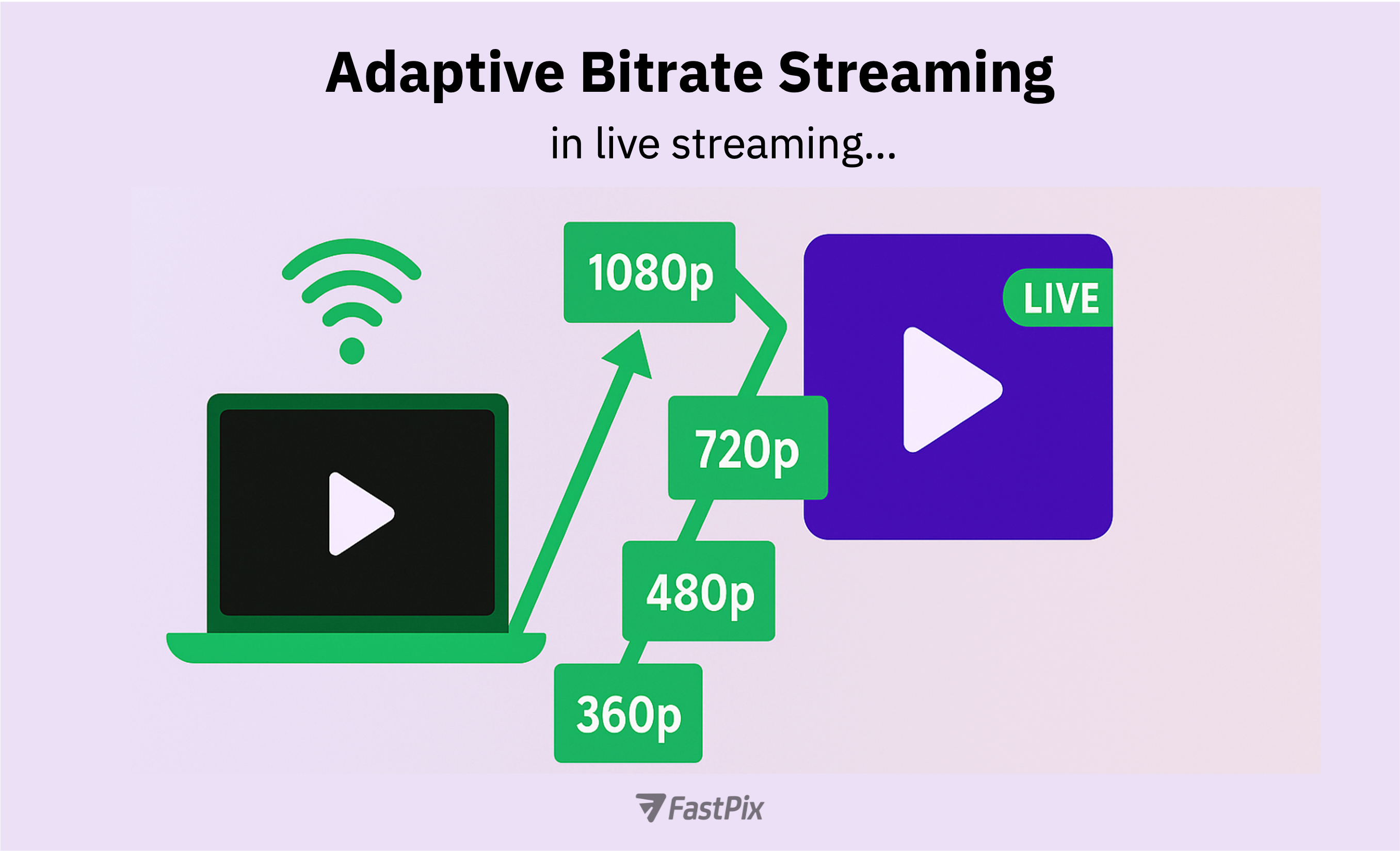

Adaptive Bitrate Streaming (ABR) is a playback technique where the video player adjusts quality on the fly based on the viewer’s network speed, device performance, and buffer health. The goal is simple: deliver the best possible stream without buffering, even on unreliable connections.

How ABR works

This dynamic switching keeps playback smooth as bandwidth fluctuates. But that adaptability isn’t free: it often introduces latency, especially in live streaming, where every second counts.
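The switching decision can be sketched as a small function. This is a simplified, throughput-only model, not how any particular player implements it: the ladder values, the `pickRendition` name, and the 0.8 safety factor are all assumptions for illustration. Real players also weigh buffer level and device capability.

```js
// Hypothetical bitrate ladder; real ladders depend on your encoder settings.
const ladder = [
  { name: "360p", bitrate: 800_000 },
  { name: "720p", bitrate: 2_500_000 },
  { name: "1080p", bitrate: 5_000_000 },
];

// Pick the highest rendition whose bitrate fits within a safety margin
// of the measured throughput; fall back to the lowest rendition.
function pickRendition(ladder, throughputBps, safetyFactor = 0.8) {
  const sorted = [...ladder].sort((a, b) => a.bitrate - b.bitrate);
  const budget = throughputBps * safetyFactor;
  let choice = sorted[0];
  for (const rendition of sorted) {
    if (rendition.bitrate <= budget) choice = rendition;
  }
  return choice;
}

console.log(pickRendition(ladder, 4_000_000).name); // "720p"
console.log(pickRendition(ladder, 500_000).name);   // "360p"
```

The safety factor leaves headroom so that a brief dip in bandwidth does not immediately drain the buffer.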

Live streaming latency is the delay between something happening in real life and the moment a viewer sees it on screen. With ABR-enabled live streams, that delay often lands somewhere between 10 and 30 seconds, even when everything’s working “fine.”

So what’s slowing things down?

The usual suspects behind live latency

Even when the stream is stable, these defaults quietly stack up. The result? Your “live” stream is often 15–30 seconds behind reality, and viewers notice.

Live video delivery has one unavoidable problem: every viewer’s network is different. Some are on fiber. Others are watching over congested 4G or shaky hotel Wi-Fi. And without adaptive bitrate streaming (ABR), you’re stuck choosing between two bad options: a bitrate too high for slower connections, which stalls playback, or one low enough for everyone, which sacrifices quality.

ABR solves this by letting the video player make real-time trade-offs. It picks the highest quality the viewer’s connection can handle right now and adapts seamlessly as bandwidth changes. That means no hard-coded quality decisions, no one-size-fits-all playback.

From a developer’s perspective, ABR is essential for live streaming because:

Bottom line: ABR isn’t just a feature. It’s the only practical way to make live video work across the messy reality of global internet connections.

Adaptive bitrate streaming improves playback quality, but it also adds latency, especially in live streams. And the biggest culprit? Segment size.

Segment size drives delay

Players can’t start playback or switch quality levels until they’ve downloaded a full segment. Bigger segments mean longer wait times. Here’s how it plays out:
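As a rough sketch of the arithmetic, assume the player stays a fixed number of segments behind the live edge. Three segments is a common default in HLS players, but treat it as an assumption here; `segmentLatencyFloor` is a hypothetical helper, and encoding, CDN, and network delays come on top of this floor.

```js
// Rough lower bound on player-side latency: the player stays a fixed number
// of segments behind the live edge. Other pipeline delays are not included.
function segmentLatencyFloor(segmentSeconds, holdBackSegments = 3) {
  return segmentSeconds * holdBackSegments;
}

for (const seconds of [6, 4, 2]) {
  const floor = segmentLatencyFloor(seconds);
  console.log(`${seconds}s segments -> at least ${floor}s behind live`);
}
```

Under this model, dropping from 6-second to 2-second segments cuts the floor from 18 seconds to 6 seconds without touching anything else in the pipeline.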

FastPix supports chunked CMAF and sub-2s segmenting out of the box, so you can keep latency low without sacrificing playback smoothness.

Even with optimized segments, your player settings can introduce unnecessary delay.

Popular players like hls.js, Shaka Player, and ExoPlayer are often tuned for buffering safety, not speed. Settings such as maxBufferLength, liveSyncDuration, and liveMaxLatencyDuration in hls.js (other players have their own equivalents) can quietly push live latency higher than you’d expect.
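To see why defaults matter, here is a simplified model of how hls.js picks its live sync target in standard (non-low-latency) mode: an explicit liveSyncDuration wins, otherwise the target is liveSyncDurationCount segments (3 by default) behind the live edge. The function name is hypothetical, and the model ignores low-latency mode, where hold-back values advertised in the playlist come into play.

```js
// Simplified model, not hls.js source code: returns how far behind the
// live edge (in seconds) the player aims to sit.
function liveSyncTargetSeconds(config, targetDurationSeconds) {
  const { liveSyncDuration, liveSyncDurationCount = 3 } = config;
  if (liveSyncDuration !== undefined) return liveSyncDuration;
  return liveSyncDurationCount * targetDurationSeconds;
}

console.log(liveSyncTargetSeconds({}, 6));                      // 18
console.log(liveSyncTargetSeconds({ liveSyncDuration: 3 }, 6)); // 3
```

With 6-second segments and an untouched config, the player targets roughly 18 seconds behind live before any other delay is counted.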

Without tuning, you’ll likely see:

To reduce ABR-related latency, two technologies stand out:

CMAF (Common Media Application Format)

CMAF allows chunked transfer of video segments. Instead of waiting for an entire 6-second segment, the player can start rendering as soon as it receives small chunks, cutting startup time and improving adaptive switching responsiveness.

Low-Latency HLS (LL-HLS)

LL-HLS extends traditional HLS with partial segments and faster playlist refreshes. Combined with a tuned player, LL-HLS can achieve <4s latency while keeping ABR fully functional.
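For a sense of what partial segments look like on the wire, the sketch below embeds a hypothetical LL-HLS media playlist excerpt and pulls out the values that drive latency. The tags (EXT-X-PART-INF, EXT-X-SERVER-CONTROL, EXT-X-PART) are part of the LL-HLS extensions; the URIs, durations, and the `readNumber` helper are made up for illustration.

```js
// Hypothetical LL-HLS media playlist excerpt; URIs and values are made up.
const playlist = `#EXTM3U
#EXT-X-TARGETDURATION:2
#EXT-X-SERVER-CONTROL:CAN-BLOCK-RELOAD=YES,PART-HOLD-BACK=1.5
#EXT-X-PART-INF:PART-TARGET=0.5
#EXTINF:2.0,
seg100.mp4
#EXT-X-PART:DURATION=0.5,URI="seg101.part0.mp4"
#EXT-X-PART:DURATION=0.5,URI="seg101.part1.mp4"`;

// Read one numeric attribute (e.g. PART-TARGET) from the playlist text.
function readNumber(text, attribute) {
  const match = text.match(new RegExp(`${attribute}=([0-9.]+)`));
  return match ? Number(match[1]) : undefined;
}

const partTarget = readNumber(playlist, "PART-TARGET");   // max part duration
const holdBack = readNumber(playlist, "PART-HOLD-BACK");  // distance from live edge
const partCount = (playlist.match(/^#EXT-X-PART:/gm) || []).length;

console.log({ partTarget, holdBack, partCount });
```

Here the player can fetch 0.5-second parts of segment 101 before the full 2-second segment exists, and the server asks it to stay about 1.5 seconds behind the live edge.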

With FastPix, both CMAF and LL-HLS are supported natively, giving you low-latency live playback with full ABR control and no extra setup required.

ABR doesn’t always add latency. It depends entirely on how your stack handles ABR behind the scenes.

How FastPix handles ABR by default

With FastPix, ABR is built into the live pipeline: no manual setup, no guesswork.

You don’t need to:

Every live stream is:

And because FastPix supports LL-HLS and chunked CMAF out of the box, your stream stays responsive even under poor network conditions, without trading latency for reliability.

In short: ABR doesn’t have to slow you down. When implemented right, it just works.

Getting adaptive bitrate streaming to work well and stay low-latency requires more than just enabling ABR. You need the right protocols, smart defaults, and real-time visibility into what your stream is actually doing. Here's how to get there.

1. Use LL-HLS or chunked CMAF

Traditional HLS waits for full segments before playback begins. LL-HLS and chunked CMAF break that rule by allowing partial segment delivery, cutting startup time and switching lag.

FastPix supports both out of the box, with minimal setup. Just enable them in your live stream config and you’re good to go.

2. Keep segment duration short

Shorter segments = faster playback.

We recommend sticking to 2-second segments or less. This helps reduce:

FastPix automatically packages your live streams into short segments and supports chunked delivery for even finer control.

3. Tune player buffer settings

Most open-source players (like hls.js, Shaka Player, and ExoPlayer) ship with conservative buffer defaults: great for stability, not so much for latency.

Here’s a sample config for hls.js:

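```js
import Hls from "hls.js";

// Pass overrides to the constructor; unspecified options keep their defaults.
const hls = new Hls({
  maxBufferLength: 6,   // target forward buffer, in seconds
  liveSyncDuration: 3,  // aim to play about 3 s behind the live edge
  enableWorker: true,   // run transmuxing in a web worker
});
```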

These values aren’t fixed, so tune them based on your use case. Lower buffers improve latency but may increase the risk of stalling under bad networks. The key is to test under real conditions.

4. Monitor what actually matters

You can’t fix what you can’t see. FastPix gives you real-time visibility into:

This lets you optimize based on real playback data, not guesswork.
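If you also want a quick client-side check of your own, live latency is one number worth sampling. The sketch below is a minimal example that assumes the stream carries wall-clock timestamps (for instance via EXT-X-PROGRAM-DATE-TIME) and that encoder and viewer clocks are roughly in sync; the function names and the 5-second budget are hypothetical.

```js
// Latency = viewer's wall clock minus the capture time of the frame on screen.
function liveLatencySeconds(nowMs, playingDateMs) {
  return (nowMs - playingDateMs) / 1000;
}

// Return the samples that exceed a latency budget (budget is an assumption).
function overBudget(samples, budgetSeconds) {
  return samples.filter((seconds) => seconds > budgetSeconds);
}

const now = Date.parse("2024-01-01T12:00:10Z");
const playing = Date.parse("2024-01-01T12:00:06Z");
console.log(liveLatencySeconds(now, playing)); // 4

console.log(overBudget([3.2, 4.1, 7.8, 2.9], 5)); // [ 7.8 ]
```

In hls.js v1, the `latency` getter on the player instance reports a comparable estimate, so you may not need to compute it by hand.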

Adaptive Bitrate Streaming is essential for delivering smooth playback, but without the right setup, it almost always introduces latency.

If you want low-latency ABR, here’s what you need:

Whether you're streaming a live event, a sports match, or a product launch, FastPix gives you everything you need to deliver a stream that feels truly live, with no manual heavy lifting.

Ready to try it? Get started with FastPix and see how low-latency ABR is supposed to work.