In-depth guide to video metadata

Video is everywhere - on your product pages, social feeds, customer support portals, internal training sites. But without metadata, all that content is locked inside a black box. It can’t be searched, sorted, reused, or made sense of at scale.

Even small teams struggle here. A few dozen videos quickly turn into hundreds. File names mean nothing if tags are inconsistent. Searching becomes guesswork.

Take something simple: a cooking channel on YouTube. You’ve published thousands of recipe videos. Now a viewer wants that vegan chocolate cake you made two years ago. How do they find it? Not by scrubbing through playlists. They find it because you tagged it with metadata like ‘vegan’, ‘dessert’, and ‘gluten-free’.

FastPix makes this easier. You can assign metadata tags directly to your videos during upload, or let FastPix automatically extract chapters, transcripts, keywords (named entities), and other metadata using AI - all through a single API. So teams don’t have to rely on manual processes or generic labels, and can actually build features on top of their video content.

In the simplest terms, video metadata is data about data. It's the information that describes and gives context to your videos, making them searchable, manageable, and valuable. Think of it as the DNA of your video content – it carries all the essential information that defines what your video is about.

This includes title, description, tags, and technical details of a video. This information helps systems and humans understand video content, facilitating discovery, organization, and use.

For online videos, metadata often extends to include device information, viewer engagement, player details, geographic location, and network conditions. By analyzing this metadata, streaming platforms can optimize the viewing experience, ensuring high-quality playback and personalized content recommendations.

Four main types of video metadata exist: descriptive, structural, technical and content-aware. Descriptive metadata includes titles, descriptions, and keyword tags. Structural metadata tells systems how to handle video files. Technical metadata includes information about file type, codec, and resolution. Content-aware metadata goes deeper, automatically capturing transcripts, objects, and on-screen text that describe the actual contents of the video.

Writing metadata for videos involves providing detailed and structured information about the video content. Here are examples of a few types of video metadata to make this concrete.

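There are two main ways to create or add metadata to your videos in FastPix: add it manually as key–value pairs, or let FastPix generate it automatically with its In-video AI features.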

Automatic transcripts, chapters, and labels are great - but most products also need application metadata: the stuff only your system knows, like campaign IDs, entitlement flags, or moderation state. In FastPix, this lives as simple key–value pairs attached to each on-demand video or live stream. It doesn’t change playback; it makes the asset queryable, sortable, and automatable across your stack.

You add metadata manually in FastPix as a flat JSON object of string or number values. You define the schema. FastPix stores it alongside the asset and returns it in API reads so your services can use it in UIs, jobs, or rules - you stay in control of meaning and behavior.

    "metadata": {
      "category": "fitness",
      "uploader_id": "user_9281"
    }
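Because the object has to stay flat, it can help to check it before sending. The sketch below is a hypothetical client-side helper (`validate_flat_metadata` is not part of any FastPix SDK). It accepts only string or number values, and it assumes booleans should be written as strings, which is an inference from the examples in this guide rather than a documented rule.

```python
def validate_flat_metadata(metadata: dict) -> list[str]:
    """Return a list of problems; an empty list means the object is flat."""
    problems = []
    for key, value in metadata.items():
        if not isinstance(key, str) or not key:
            problems.append(f"key {key!r} must be a non-empty string")
        # bool is a subclass of int in Python, so it is rejected explicitly
        # (assumption: flags are stored as strings such as "true").
        if isinstance(value, bool) or not isinstance(value, (str, int, float)):
            problems.append(
                f"{key!r}: expected string or number, got {type(value).__name__}"
            )
    return problems


# A flat object passes; a nested one is reported.
print(validate_flat_metadata({"category": "fitness", "uploader_id": "user_9281"}))
print(validate_flat_metadata({"category": {"primary": "fitness"}}))
```

Running a check like this in your upload service catches nested objects or lists before they reach the API.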

Attach your keys at the moment the media asset enters the system. Setting them at upload makes indexing and downstream workflows easier.

    {
      "inputs": [
        {
          "type": "video",
          "url": "https://static.fastpix.io/sample.mp4"
        }
      ],
      "metadata": {
        "project": "app_launch",
        "category": "yoga"
      },
      "accessPolicy": "public",
      "maxResolution": "1080p"
    }
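If you assemble this body in code, a small builder keeps the shape consistent across services. This is a minimal sketch: `build_upload_payload` is a hypothetical helper, the field names are copied from the request body above, and the sketch only builds the JSON. It does not send it, since the create endpoint and authentication are not covered here.

```python
import json


def build_upload_payload(video_url, metadata,
                         access_policy="public", max_resolution="1080p"):
    """Assemble a request body shaped like the upload example above."""
    return {
        "inputs": [{"type": "video", "url": video_url}],
        "metadata": dict(metadata),  # copy so the caller's dict is not shared
        "accessPolicy": access_policy,
        "maxResolution": max_resolution,
    }


payload = build_upload_payload(
    "https://static.fastpix.io/sample.mp4",
    {"project": "app_launch", "category": "yoga"},
)
print(json.dumps(payload, indent=2))
```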

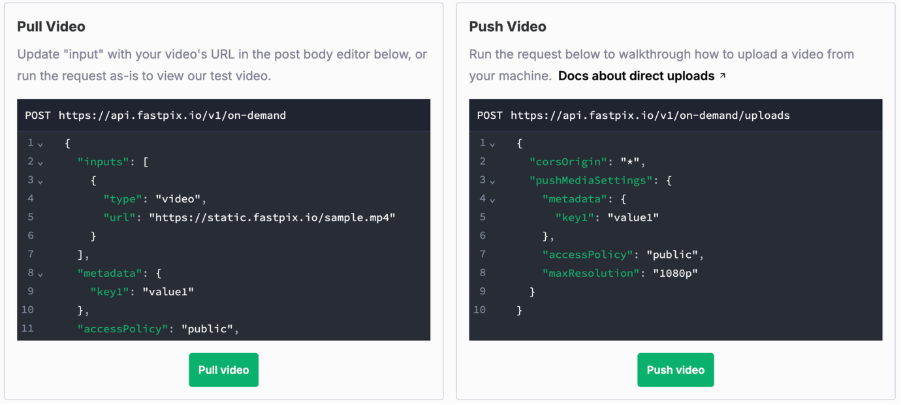

If you’re using the dashboard to upload videos: go to Upload Media → choose Push or Pull → scroll to Metadata → add your key–value pairs → Run.

You can overwrite the object later if something changes (locale, review state, campaign).

Endpoint

PATCH https://api.fastpix.io/v1/on-demand/{mediaId}

Body

    {
      "metadata": {
        "language": "es",
        "reviewed": "true"
      }
    }

Note: updates are full overwrites - include every key you want to keep; partial merges aren’t supported.
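Since an update replaces the whole object, a safe pattern is to read the current metadata, merge your changes locally, and send the full result. The sketch below illustrates that pattern under stated assumptions: `merged_metadata` and `build_patch_request` are hypothetical helpers, the current metadata is assumed to come from an earlier API read, and authentication headers are left out because they are not described in this guide. The request is built but not sent.

```python
import json
import urllib.request

API_BASE = "https://api.fastpix.io/v1/on-demand"  # from the endpoint above


def merged_metadata(current, changes, remove=()):
    """Return the full object to send: current keys, plus changes, minus removals."""
    merged = {**current, **changes}
    for key in remove:
        merged.pop(key, None)
    return merged


def build_patch_request(media_id, metadata):
    """Build (but do not send) the PATCH request; auth headers are omitted."""
    body = json.dumps({"metadata": metadata}).encode("utf-8")
    return urllib.request.Request(
        f"{API_BASE}/{media_id}",
        data=body,
        method="PATCH",
        headers={"Content-Type": "application/json"},
    )


# Metadata as previously read from the asset (assumed).
current = {"project": "app_launch", "category": "yoga"}
full = merged_metadata(current, {"language": "es", "reviewed": "true"})
request = build_patch_request("your-media-id", full)
print(request.get_method(), request.full_url)
print(full)
```

Merging locally keeps `project` and `category` in the payload, so the overwrite does not drop them.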

Most teams end up stitching together speech-to-text services, object recognition models, spreadsheets, and manual tags - and still don’t get consistent metadata.

FastPix removes that overhead by letting you generate metadata (through In-video AI features) and tag your videos through a unified API. Here’s how it works:

1. Automatic metadata extraction on upload

When you upload a video to FastPix (via dashboard or API), the system instantly begins processing it in the background. It transcribes speech, detects objects, reads on-screen text (OCR), identifies speakers, and segments scenes - all without any extra setup.

2. Timeline-aware metadata

Each piece of metadata - a transcript line, a descriptive label, or a section - is tied to a timestamp. That means developers can build features like “jump to keyword,” highlight reels, or smart previews without building a timeline engine themselves.

    {
      "data": {
        "isChaptersGenerated": true,
        "chapters": [
          {
            "chapterNumber": 1,
            "title": "Introduction to Blockchain",
            "startTime": "00:00:00",
            "endTime": "00:02:30",
            "description": "Introduction to transactional problems and blockchain."
          },
          {
            "chapterNumber": 2,
            "title": "How Bitcoin Transactions Work",
            "startTime": "00:02:31",
            "endTime": "00:05:30",
            "description": "Explanation of bitcoin transactions and the mining process."
          }
        ]
      }
    }
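To show how timestamped chapters turn into a "jump to keyword" feature, here is a minimal sketch that works on the chapter fields from the example payload. The helper names (`to_seconds`, `chapter_at`, `first_chapter_matching`) are illustrative only, and the code assumes timestamps always use the `HH:MM:SS` form shown above.

```python
def to_seconds(timestamp: str) -> int:
    """Convert an "HH:MM:SS" timestamp to whole seconds."""
    hours, minutes, seconds = (int(part) for part in timestamp.split(":"))
    return hours * 3600 + minutes * 60 + seconds


def chapter_at(chapters, position_seconds):
    """Return the chapter whose start..end range contains the playback position."""
    for chapter in chapters:
        start = to_seconds(chapter["startTime"])
        end = to_seconds(chapter["endTime"])
        if start <= position_seconds <= end:
            return chapter
    return None


def first_chapter_matching(chapters, keyword):
    """Return the first chapter whose title or description mentions the keyword."""
    needle = keyword.lower()
    for chapter in chapters:
        text = f'{chapter["title"]} {chapter.get("description", "")}'.lower()
        if needle in text:
            return chapter
    return None


chapters = [
    {"chapterNumber": 1, "title": "Introduction to Blockchain",
     "startTime": "00:00:00", "endTime": "00:02:30",
     "description": "Introduction to transactional problems and blockchain."},
    {"chapterNumber": 2, "title": "How Bitcoin Transactions Work",
     "startTime": "00:02:31", "endTime": "00:05:30",
     "description": "Explanation of bitcoin transactions and the mining process."},
]

playing = chapter_at(chapters, 200)
print(playing["title"])                  # How Bitcoin Transactions Work

hit = first_chapter_matching(chapters, "mining")
print(to_seconds(hit["startTime"]))      # 151, the position to seek to
```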

3. Standardized schema, API-first access

Whether you need chapters, keywords, transcripts, or video tags, everything is accessible in a clean, consistent JSON structure through the FastPix Metadata API. No parsing random formats or mapping fields from 10 sources.

4. Smart tagging and classification

Beyond basic detection, FastPix uses AI models to assign relevant tags (e.g., interview, gameplay, product demo) based on the content, pace, and structure of the video. This powers better organization, recommendations, and internal workflows.

    {
      "data": {
        "isNamedEntitiesGenerated": true,
        "namedEntities": [
          "Zebra (Animal)",
          "Gravy (Food)",
          "Plain Zebra (Animal)",
          "Mountain Zebra (Animal)",
          "Africa (Geography)",
          "Foals (Animal)"
        ]
      }
    }
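The entity strings in this example follow a "Name (Type)" pattern, so they are easy to turn into facets for filtering or search. The sketch below groups them by type. It assumes every entity follows that pattern, which is inferred from this one example and not a documented guarantee, so anything that does not match is kept under an "Unknown" bucket.

```python
import re
from collections import defaultdict

# Matches "Name (Type)", e.g. "Plain Zebra (Animal)".
ENTITY_PATTERN = re.compile(r"^(?P<name>.+?)\s*\((?P<type>[^()]+)\)$")


def group_entities(named_entities):
    """Group "Name (Type)" strings by type; other strings go under "Unknown"."""
    grouped = defaultdict(list)
    for raw in named_entities:
        match = ENTITY_PATTERN.match(raw.strip())
        if match:
            grouped[match["type"]].append(match["name"])
        else:
            grouped["Unknown"].append(raw)
    return dict(grouped)


entities = [
    "Zebra (Animal)", "Gravy (Food)", "Plain Zebra (Animal)",
    "Mountain Zebra (Animal)", "Africa (Geography)", "Foals (Animal)",
]
print(group_entities(entities))
```

Grouped this way, the payload can drive a filter such as "all videos that mention an Animal".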

5. Content moderation signals

For platforms that require compliance or content safety, FastPix can surface NSFW flags, profanity detected in transcripts, or sensitive visual elements - directly within the same metadata payload.

Every major video product you use - YouTube’s chapters, Twitch’s clips, Netflix’s recommendations - runs on metadata. Without it, video is just a blob of pixels and sound. With it, you get features, personalization, monetization, and debugging tools that make your app usable.

So the question isn’t whether you need metadata. It’s whether you want to wire the whole system yourself or use one that already treats metadata as first-class.

Want to see how it works? Sign up today to try FastPix with $25 free credits and see how easy it is to build powerful video features.

Properly optimized metadata can improve a video's visibility in search engine results, leading to higher engagement and viewership. By including relevant keywords and descriptions, videos are more likely to appear in search queries related to their content.

With FastPix, you can update metadata for any asset at any time using the API, without having to re-upload or re-transcode your video files. This lets you add, change, or remove tags, custom fields, or even timed events on existing assets, including at large scale with batch API calls or scripts.

You can set up FastPix to automatically run enrichment workflows (like AI transcription or tagging) on upload. This is configured via the API or dashboard, so your assets are processed and enriched without manual intervention.

FastPix supports webhook notifications and can call your endpoints whenever metadata is created, updated, or deleted. You can use this to trigger automations, such as sending alerts, starting video edits, or updating recommendations, based on live metadata events.

You can pull complete metadata (including timed events) via FastPix’s API, then transform and export it as JSON or CSV for use in analytics pipelines or BI tools. For large-scale exports, batching and pagination are supported.
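As an illustration of the transform step, the sketch below flattens chapter records into CSV using only the Python standard library. It assumes the chapter fields match the example payload earlier in this guide. `chapters_to_csv` and the `mediaId` column are hypothetical, and fetching the data from the API is left out.

```python
import csv
import io


def chapters_to_csv(media_id, chapters):
    """Flatten chapter records into CSV text, one row per chapter."""
    fields = ["mediaId", "chapterNumber", "title", "startTime", "endTime"]
    buffer = io.StringIO()
    writer = csv.DictWriter(
        buffer,
        fieldnames=fields,
        extrasaction="ignore",  # skip keys such as "description"
        lineterminator="\n",
    )
    writer.writeheader()
    for chapter in chapters:
        writer.writerow({"mediaId": media_id, **chapter})
    return buffer.getvalue()


sample = [
    {"chapterNumber": 1, "title": "Introduction to Blockchain",
     "startTime": "00:00:00", "endTime": "00:02:30",
     "description": "Introduction to transactional problems and blockchain."},
]
print(chapters_to_csv("media_123", sample))
```

Writing one row per timed event keeps the export easy to load into a spreadsheet or BI tool.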